OpenAI's 2024 Developer Event: Easier Voice Assistant Creation

Streamlined Development Processes with New APIs

OpenAI's 2024 event simplifies voice assistant creation by introducing new APIs that abstract away much of the underlying complexity of natural language understanding (NLU), speech-to-text (STT), and text-to-speech (TTS). Developers can then focus on the aspects that are unique to their applications, which can substantially reduce development time and resources.

- Reduced code complexity: Developers can achieve the same functionality with much less code, which frees them to innovate and iterate more quickly. Instead of writing hundreds of lines of code to handle speech recognition, they can make a few concise API calls.

- Improved integration with existing platforms: The new APIs are designed to integrate with popular development frameworks and platforms. This makes it easier to add voice assistant capabilities to existing projects and reduces the need for extensive platform-specific adaptations.

- Access to pre-trained models for faster development: OpenAI provides access to accurate pre-trained models for NLU, STT, and TTS. This removes the need to train models from scratch and shortens development cycles. Because these models are updated over time, a voice assistant built on them can benefit from new advances in natural language processing.

- Simplified API calls: Instead of writing complex algorithms for intent recognition, developers can use a single call along the lines of openai.voice.recognize(audio_file) to get both a transcription and an intent classification. OpenAI's documentation will provide code examples and tutorials to help with implementation.
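The idea that the new APIs hide STT, NLU, and TTS behind a few calls can be illustrated with a small pipeline that chains the three stages. This is a minimal Python sketch, not OpenAI's API. VoicePipeline, VoiceResult, and the stub stages are hypothetical names, and the stubs exist only so the example runs offline. In a real application each stage would wrap a hosted model call.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical stage signatures; in a real app each would wrap a hosted model.
Transcriber = Callable[[bytes], str]      # speech-to-text (STT)
IntentClassifier = Callable[[str], str]   # natural language understanding (NLU)
Synthesizer = Callable[[str], bytes]      # text-to-speech (TTS)


@dataclass
class VoiceResult:
    transcript: str
    intent: str
    reply_audio: bytes


@dataclass
class VoicePipeline:
    """Chains STT -> NLU -> reply lookup -> TTS behind one call (hypothetical design)."""
    transcribe: Transcriber
    classify: IntentClassifier
    synthesize: Synthesizer
    replies: Dict[str, str]

    def handle(self, audio: bytes) -> VoiceResult:
        text = self.transcribe(audio)
        intent = self.classify(text)
        reply = self.replies.get(intent, "Sorry, I didn't catch that.")
        return VoiceResult(text, intent, self.synthesize(reply))


# Stub stages so the sketch runs offline; a real app would call actual models here.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the "audio" is already text


def keyword_classify(text: str) -> str:
    return "weather" if "weather" in text.lower() else "unknown"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")  # pretend these bytes are audio


pipeline = VoicePipeline(
    transcribe=fake_transcribe,
    classify=keyword_classify,
    synthesize=fake_synthesize,
    replies={"weather": "It looks sunny today."},
)

result = pipeline.handle(b"What's the weather like?")
print(result.intent)  # prints: weather
```

Because each stage is a plain function, a stub can be replaced by a real model call, or one provider by another, without changing the rest of the pipeline.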

Enhanced Speech Recognition and Synthesis Capabilities

The accuracy and naturalness of speech recognition and synthesis are crucial for a positive user experience. OpenAI has made significant strides in both areas, resulting in a more seamless and intuitive interaction for users.

- Improved accuracy in noisy environments: The new speech recognition models are markedly more accurate in environments with background noise. This expands the potential use cases for voice assistants and makes them more practical in real-world settings.

- Support for multiple languages and accents: OpenAI's updated models support a wider range of languages and accents. This makes voice assistants accessible to a global audience and broadens developers' potential market.

- More natural and expressive synthesized speech: Synthesized speech now sounds more human and expressive. This creates a more engaging and comfortable user experience, which is vital for widespread adoption.

- New models and algorithms: OpenAI is expected to unveil new models built on recent advances in natural language processing and deep learning that improve performance across many aspects of voice processing.
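Speech recognition accuracy claims are usually reported as word error rate (WER). WER is the number of word substitutions, deletions, and insertions needed to turn the recognizer's output into a reference transcript, divided by the number of words in the reference. To compare models on your own noisy recordings, you could start from a minimal Python sketch like the one below. It assumes simple lowercasing and whitespace tokenization. Production evaluations typically also normalize punctuation and numbers.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # Before processing row i, prev[j] is the edit distance between the
    # first i-1 reference words and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from output
                curr[j - 1] + 1,     # insertion: extra word in output
                prev[j - 1] + cost,  # substitution (or exact match when cost is 0)
            )
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("turn off the kitchen lights", "turn of the kitchen light"))
```

Scoring the hypothesis "turn of the kitchen light" against the reference "turn off the kitchen lights" gives two substitutions over five reference words, so the WER is 0.4. Lower is better, and a WER of 0 means an exact match.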

Pre-built Modules for Common Voice Assistant Features

OpenAI is delivering pre-built modules for common voice assistant features, further accelerating the development process. These modules handle complex tasks, enabling developers to focus on the unique value proposition of their applications.

- Intent recognition modules: Pre-built intent recognition modules interpret user requests so that the voice assistant can determine what the user wants to do.

- Dialogue management modules: These modules manage the flow of conversation. They keep interactions smooth and natural and simplify the creation of complex conversational flows.

- Integration with popular smart home devices: OpenAI's modules offer straightforward integration with leading smart home platforms, allowing developers to add voice control to existing smart home ecosystems.

- Example: A developer can add smart home control by integrating a pre-built module that handles commands such as "turn off the lights" or "set the thermostat to 72 degrees".
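A few rules can approximate what a pre-built intent module does for the two smart home commands above. The sketch below is hypothetical and does not describe OpenAI's modules. parse_command, the intent names lights_off and set_thermostat, and the follow-up prompt are illustrative assumptions, and regular expressions stand in for a trained intent model. It also shows a minimal piece of dialogue management: when a required slot (the temperature) is missing, it asks a follow-up question instead of guessing.

```python
import re
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Command:
    intent: str                                       # hypothetical intent name
    slots: Dict[str, int] = field(default_factory=dict)
    follow_up: Optional[str] = None                   # question to ask if a slot is missing


# Regex rules stand in for a trained intent-recognition model.
LIGHTS_OFF = re.compile(r"\bturn\s+off\s+the\s+lights?\b", re.IGNORECASE)
THERMOSTAT = re.compile(
    r"\bset\s+the\s+thermostat(?:\s+to\s+(?P<temp>\d{2,3})(?:\s*degrees)?)?",
    re.IGNORECASE,
)


def parse_command(utterance: str) -> Command:
    """Map an utterance to an intent plus slots (a rule-based sketch)."""
    if LIGHTS_OFF.search(utterance):
        return Command(intent="lights_off")
    match = THERMOSTAT.search(utterance)
    if match:
        temp = match.group("temp")
        if temp is None:
            # Dialogue management: ask for the missing slot rather than guessing.
            return Command(intent="set_thermostat",
                           follow_up="What temperature should I set?")
        return Command(intent="set_thermostat", slots={"temperature": int(temp)})
    return Command(intent="unknown")


print(parse_command("Please turn off the lights").intent)        # lights_off
print(parse_command("Set the thermostat to 72 degrees").slots)   # {'temperature': 72}
print(parse_command("set the thermostat").follow_up)             # What temperature should I set?
```

Returning a structured Command instead of raw text lets the device-control layer act on the intent and slots directly. A trained model could replace the regular expressions without changing that interface.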

Improved Training and Documentation Resources

OpenAI recognizes the importance of robust training and support for developers. The 2024 event includes significant improvements to the resources available to help developers learn and successfully utilize these new tools.

- Comprehensive documentation and tutorials: OpenAI is providing well-structured documentation and step-by-step tutorials to guide developers through integrating the new APIs and modules.

- Access to online communities and support forums: Developers can connect with each other and with OpenAI experts through online communities and support forums, where they can share knowledge and troubleshoot issues together.

- Detailed examples and case studies: Real-world examples and case studies illustrate practical applications of the new tools and show developers how to implement specific functionality.

- Improved developer tools and debugging capabilities: OpenAI is investing in developer tools with better debugging support and a streamlined testing process, helping developers identify and resolve issues quickly.

Conclusion

OpenAI's 2024 developer event marks a significant step toward simpler voice assistant creation. The new APIs, enhanced speech capabilities, pre-built modules, and improved resources let developers build more sophisticated and user-friendly voice assistants with significantly less effort. Explore OpenAI's developer resources and start building your next-generation voice assistant today.

Featured Posts

-

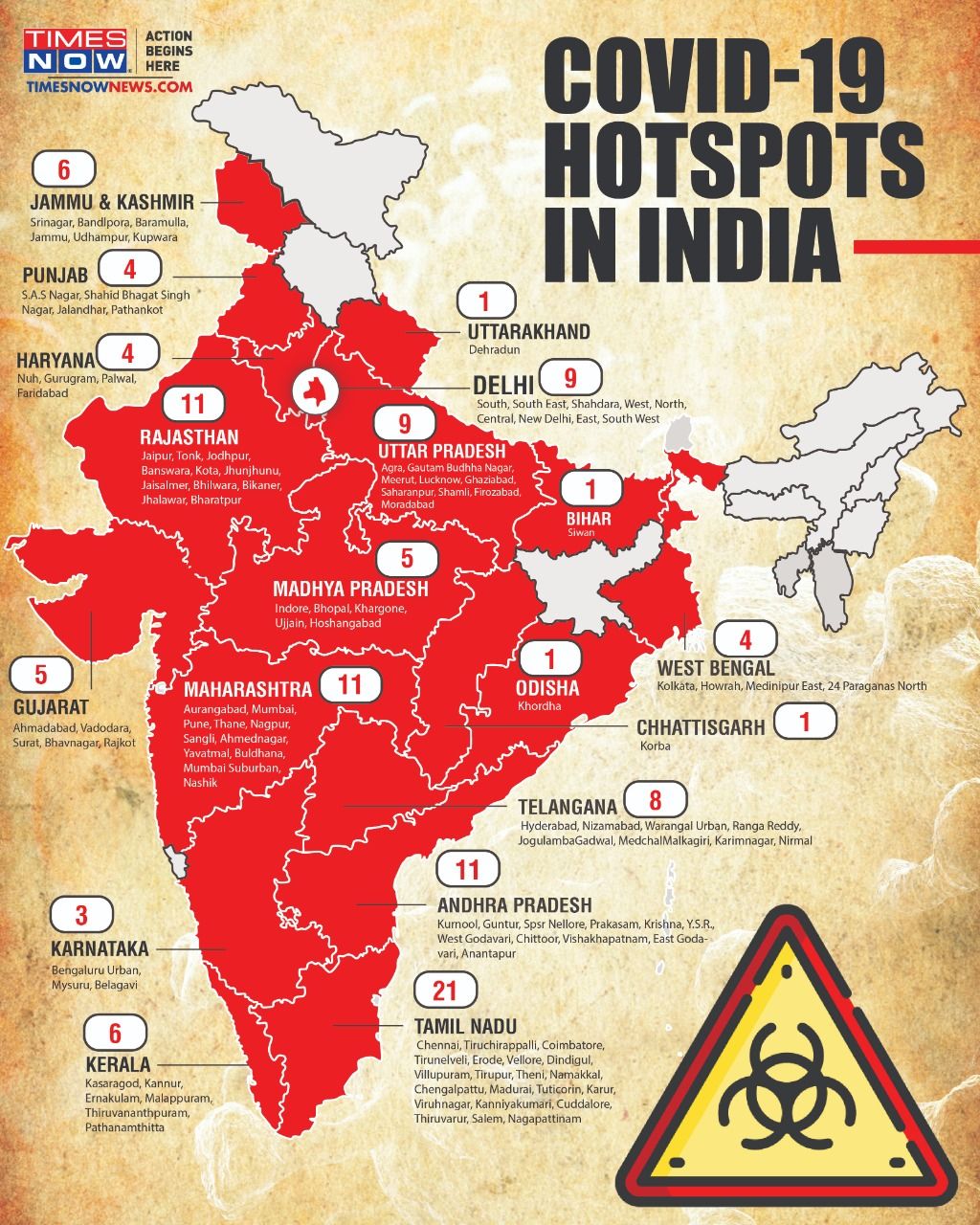

New Business Hotspots Across The Country An Interactive Map

Apr 22, 2025

New Business Hotspots Across The Country An Interactive Map

Apr 22, 2025 -

Ev Mandate Faces Renewed Opposition From Car Dealers

Apr 22, 2025

Ev Mandate Faces Renewed Opposition From Car Dealers

Apr 22, 2025 -

Cybercriminal Makes Millions Targeting Executive Office365 Accounts

Apr 22, 2025

Cybercriminal Makes Millions Targeting Executive Office365 Accounts

Apr 22, 2025 -

Ukraine Conflict Russia Resumes Offensive After Easter Ceasefire

Apr 22, 2025

Ukraine Conflict Russia Resumes Offensive After Easter Ceasefire

Apr 22, 2025 -

Enhanced Security Partnership China And Indonesias Growing Collaboration

Apr 22, 2025

Enhanced Security Partnership China And Indonesias Growing Collaboration

Apr 22, 2025