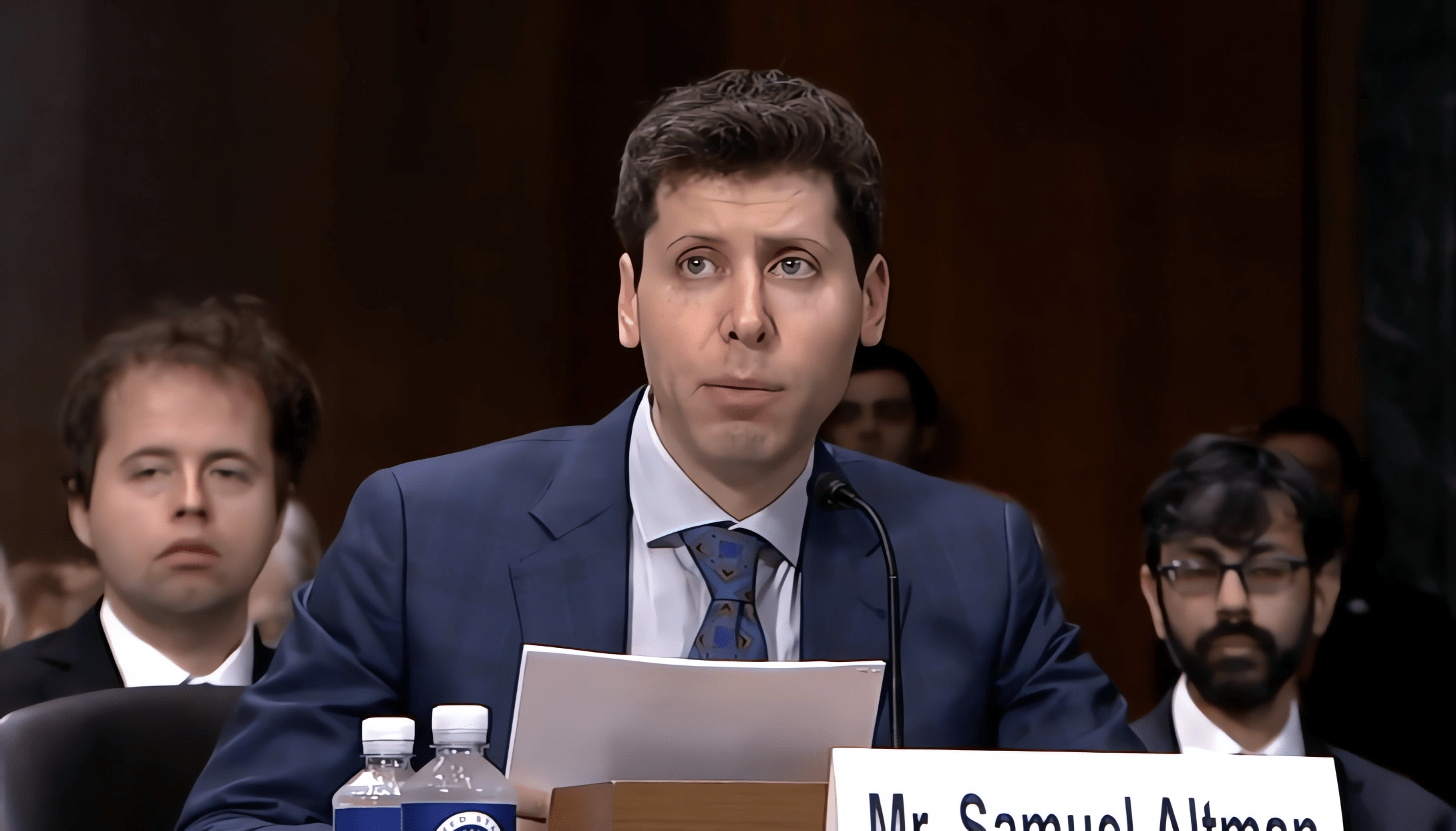

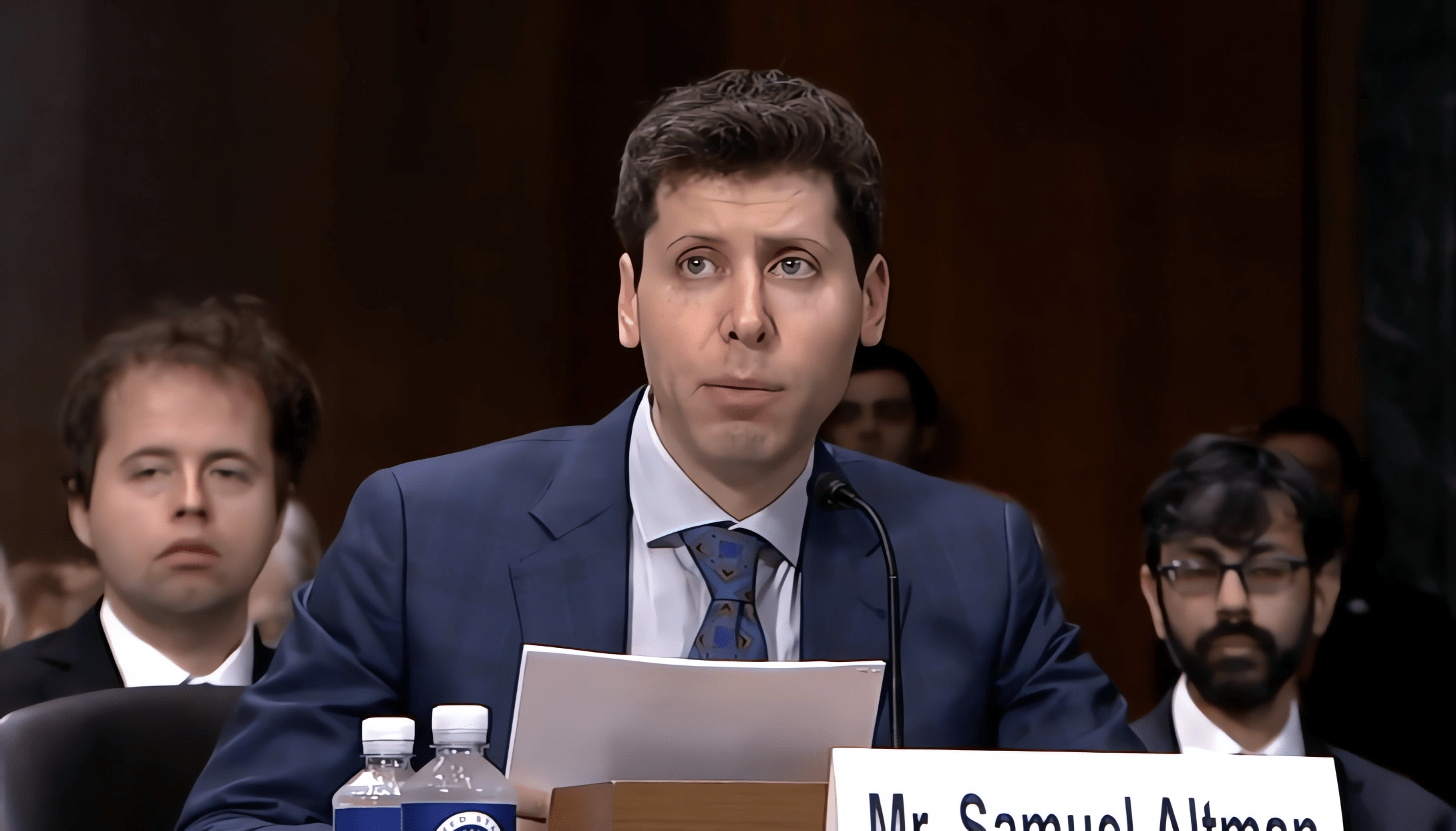

FTC Investigates OpenAI's ChatGPT: What It Means For AI

The FTC's Concerns Regarding ChatGPT and AI

The FTC's investigation into ChatGPT stems from several key concerns related to the potential risks posed by powerful AI systems. These concerns are not unique to ChatGPT but highlight the broader challenges inherent in the rapid advancement of AI technology.

Data Privacy and Security

The FTC is likely scrutinizing ChatGPT's data handling practices. Concerns center on several key areas:

- Potential violations of consumer privacy laws: Collecting and using personal data to train the model without explicit consent could breach regulations such as the CCPA (California Consumer Privacy Act) and the GDPR (General Data Protection Regulation). The FTC does not enforce either of these; its own authority comes from the FTC Act's prohibition on unfair or deceptive practices.

- Unauthorized data collection: The model's training data might include sensitive personal information scraped from the internet without proper authorization.

- Lack of transparency in data usage: Users may not fully understand how their data is being used to train and improve the AI model.

- Vulnerability to data breaches: The vast datasets used to train large language models (LLMs) like ChatGPT, along with stored user conversations, are an attractive target for malicious actors, increasing the risk of data breaches and identity theft.

These concerns underscore the critical need for enhanced data protection measures within AI systems and the importance of building user trust through transparency and accountability. The ability to ensure data privacy and security is crucial for the responsible development of AI technology.

Algorithmic Bias and Discrimination

A major concern surrounding AI models like ChatGPT is the potential for algorithmic bias. The data used to train these models often reflects existing societal biases, leading to discriminatory outputs.

- Examples of biased outputs: ChatGPT might generate responses that perpetuate stereotypes based on race, gender, religion, or other protected characteristics.

- The difficulty in detecting and mitigating bias: Identifying and eliminating bias from massive datasets is a significant technical challenge.

- The ethical implications of deploying biased AI: Deploying biased AI systems can exacerbate existing inequalities and lead to unfair or discriminatory outcomes in various applications.

Addressing algorithmic bias requires rigorous data auditing, algorithmic fairness techniques, and ongoing monitoring to ensure that AI systems treat all users equitably. The development of fairer and more inclusive AI is paramount.
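One simple form that ongoing monitoring could take is a demographic parity check, which compares how often each group receives a favourable outcome. The snippet below is a minimal sketch of that idea, not a description of how OpenAI or the FTC audits models; the function names, group labels, and sample data are all hypothetical.

```python
from collections import defaultdict


def positive_rate_by_group(records):
    """Return the share of favourable outcomes for each group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    True for a favourable model decision and False otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Largest difference in favourable-outcome rate between any two groups."""
    rates = positive_rate_by_group(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: (group label, favourable outcome?)
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = positive_rate_by_group(sample)
gap = demographic_parity_gap(sample)
print(rates, gap)
```

A gap near zero means the groups receive favourable outcomes at similar rates. A large gap does not prove discrimination on its own, but it flags where a closer audit of the data and the model is warranted.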

Misinformation and Malicious Use

ChatGPT's ability to generate human-quality text raises concerns about its potential for misuse in spreading misinformation and creating harmful content.

- Deepfakes: ChatGPT itself produces text, not video or audio, but its output could supply convincing scripts and captions for fake recordings made with other tools, potentially damaging reputations or manipulating public opinion.

- Spread of propaganda: ChatGPT could be exploited to generate convincing but false narratives, contributing to the spread of propaganda and disinformation.

- Generation of malicious code and phishing content: Malicious actors could use the model to write sophisticated phishing emails or to help produce malware and other harmful code.

- Impersonation: ChatGPT could be used to impersonate individuals or organizations, potentially leading to scams or fraud.

The challenge lies in developing mechanisms to detect and mitigate the malicious use of AI while preserving its legitimate applications. This requires a collaborative effort between AI developers, policymakers, and the broader community.

Potential Consequences for OpenAI

The FTC investigation could have significant consequences for OpenAI, potentially impacting its operations, finances, and reputation.

Financial Penalties

OpenAI faces the possibility of substantial fines and legal repercussions if the FTC finds evidence of violations of consumer protection laws or other regulations. These penalties could significantly impact the company's financial stability.

Operational Restrictions

The FTC might impose restrictions on OpenAI's operations, limiting its data collection practices, model development, or deployment of AI systems until stricter safeguards are implemented.

Reputational Damage

The investigation itself could damage OpenAI's reputation, impacting its brand image, investor confidence, and its ability to attract and retain talent. This damage could have long-term repercussions for the company's growth and success.

Broader Implications for the AI Industry

The FTC's investigation into OpenAI's ChatGPT has significant implications for the broader AI industry, signaling a potential shift in how AI is developed, regulated, and deployed.

Increased Regulatory Scrutiny

This investigation is likely to trigger increased regulatory scrutiny of AI development and deployment globally. We can expect more stringent regulations and oversight to ensure responsible AI practices.

Accelerated Development of Ethical Guidelines

The investigation underscores the urgent need for the AI industry to develop and adopt stronger ethical guidelines and responsible AI practices. This includes establishing clear standards for data privacy, algorithmic fairness, and the mitigation of potential harms.

Impact on AI Innovation

While increased regulation might seem to stifle innovation, it could also foster a more responsible and sustainable approach to AI development. A focus on ethical considerations can ultimately lead to more trustworthy and beneficial AI systems.

Conclusion

The FTC's investigation into OpenAI's ChatGPT could mark a turning point in the evolution of AI. The concerns raised regarding data privacy, algorithmic bias, and the potential for misuse emphasize the urgent need for ethical guidelines and robust regulatory frameworks. The outcome for OpenAI could set a precedent for other AI developers, underscoring the importance of responsible AI development and deployment. The future of AI hinges on proactively addressing these challenges and fostering collaboration between developers, policymakers, and the public to ensure that this transformative technology benefits humanity.

Call to Action: Stay informed about the FTC investigation into OpenAI's ChatGPT and the evolving landscape of AI regulation. Understanding these developments is crucial for navigating the complexities of this transformative technology. Learn more about responsible AI development and advocate for ethical AI practices to shape a future where AI serves humanity's best interests.

Featured Posts

-

Us And South Sudan Partner To Manage Deportees Repatriation Process

Apr 22, 2025

Us And South Sudan Partner To Manage Deportees Repatriation Process

Apr 22, 2025 -

How Is A New Pope Chosen A Comprehensive Guide To Papal Conclaves

Apr 22, 2025

How Is A New Pope Chosen A Comprehensive Guide To Papal Conclaves

Apr 22, 2025 -

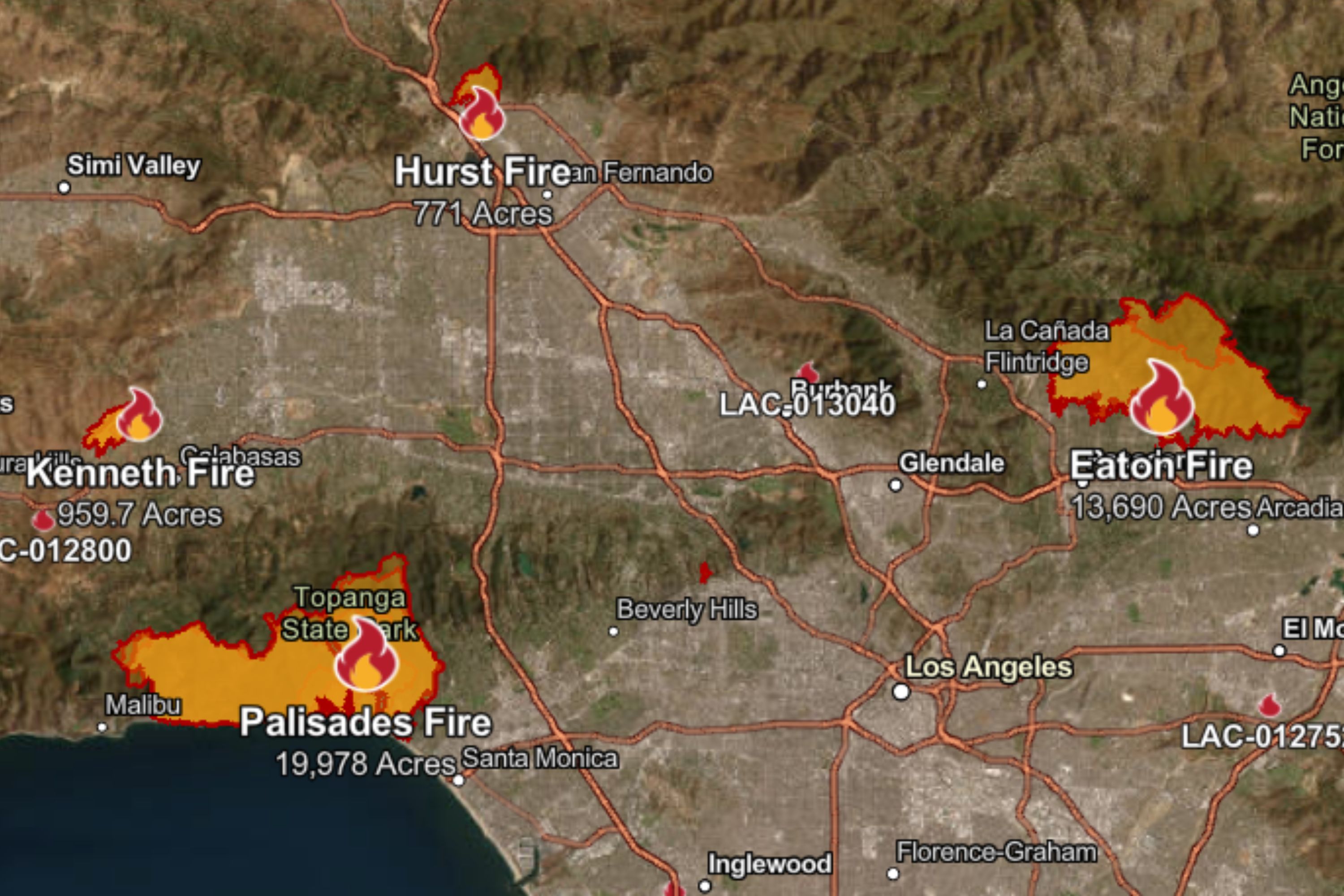

Full List Celebrities Affected By The Palisades Fires In Los Angeles

Apr 22, 2025

Full List Celebrities Affected By The Palisades Fires In Los Angeles

Apr 22, 2025 -

Trump Administration Escalates Harvard Funding Dispute Threatening 1 Billion

Apr 22, 2025

Trump Administration Escalates Harvard Funding Dispute Threatening 1 Billion

Apr 22, 2025 -

T Mobiles 16 Million Data Breach Fine Three Years Of Security Failures

Apr 22, 2025

T Mobiles 16 Million Data Breach Fine Three Years Of Security Failures

Apr 22, 2025