Build Voice Assistants Easily With OpenAI's New Tools

Understanding OpenAI's Role in Simplifying Voice Assistant Development

OpenAI's contributions to natural language processing (NLP) and speech recognition have dramatically altered the landscape of voice assistant development. Traditionally, building a voice assistant required extensive expertise in multiple areas, including acoustic modeling, speech recognition, natural language understanding, and dialogue management. OpenAI's readily available APIs and pre-trained models significantly reduce this complexity.

Key APIs and tools from OpenAI crucial for voice assistant creation include:

- Whisper: OpenAI's Whisper API provides robust and accurate speech-to-text capabilities, converting spoken language into text for processing. This eliminates the need to build your own speech recognition engine, saving significant development time and effort.

- GPT models (GPT-3, GPT-4, etc.): These powerful language models are at the heart of natural language understanding and response generation. They enable your voice assistant to interpret user requests, generate meaningful responses, and engage in natural-sounding conversations.
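Before sending audio to the hosted Whisper endpoint, it can help to validate the file locally so that obvious problems surface before an API call. The sketch below assumes the limits OpenAI documents for its transcription endpoint at the time of writing (uploads of up to about 25 MB in formats such as mp3, mp4, mpeg, mpga, m4a, wav, and webm); treat those values as assumptions and check the current API reference. The helper `check_whisper_upload` is hypothetical, not part of any SDK.

```python
from pathlib import Path

# Assumed limits based on OpenAI's transcription docs at the time of writing; verify before relying on them.
MAX_UPLOAD_BYTES = 25 * 1024 * 1024  # "25 MB"; the exact byte count the API enforces is an assumption
SUPPORTED_EXTENSIONS = {".mp3", ".mp4", ".mpeg", ".mpga", ".m4a", ".wav", ".webm"}


def check_whisper_upload(path: str) -> list[str]:
    """Return a list of problems likely to make the upload fail (empty if none found)."""
    problems = []
    file = Path(path)
    if file.suffix.lower() not in SUPPORTED_EXTENSIONS:
        problems.append(f"unsupported format: {file.suffix or '(none)'}")
    if not file.is_file():
        problems.append("file not found")
    elif file.stat().st_size > MAX_UPLOAD_BYTES:
        problems.append(f"file is {file.stat().st_size} bytes; limit is {MAX_UPLOAD_BYTES}")
    return problems
```

An empty list means the file passed these local checks; the API can still reject it for other reasons (for example, corrupted audio).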

The advantages of using OpenAI's tools are numerous:

- Simplified API access for easier integration: The APIs are designed for intuitive use, minimizing the technical hurdles for developers.

- Pre-trained models for faster development: You can leverage pre-trained models, eliminating the need to train models from scratch, greatly accelerating development cycles.

- Improved accuracy and natural language understanding: OpenAI's models boast high accuracy in speech-to-text and natural language understanding, leading to a more seamless user experience.

- Cost-effective solutions compared to traditional methods: Using OpenAI's services can be more cost-effective than building and maintaining your own infrastructure and models.

Step-by-Step Guide: Building a Basic Voice Assistant with OpenAI

Let's outline a basic process for constructing a simple voice assistant. Remember, this is a simplified example; real-world implementations often require more advanced techniques.

1. Setting up the environment: You'll need an OpenAI API key and a client library for your chosen programming language. Python is a common choice, using the official `openai` package (installed with `pip install openai`).
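The official `openai` Python client reads the API key from the `OPENAI_API_KEY` environment variable by default. A small startup check like the hypothetical `require_api_key` below (a sketch, not part of the SDK) fails early with a clear message instead of at the first API call.

```python
import os
from typing import Mapping


def require_api_key(env: Mapping[str, str] = os.environ) -> str:
    """Return the OpenAI API key from the environment, or raise a descriptive error."""
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "OPENAI_API_KEY is not set; export it in your shell before running the assistant."
        )
    return key
```

Passing a plain dictionary as `env` makes the check easy to test; in the application itself, call it with no arguments at startup.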

2. Speech-to-text using Whisper: Use the Whisper API to transcribe audio input from a microphone or audio file into text. This step involves sending audio data to the API and receiving the transcribed text as output.

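Example (Python, using the official `openai` package, version 1.0 or later):

```python
from openai import OpenAI

client = OpenAI()  # reads your key from the OPENAI_API_KEY environment variable

with open("audio.wav", "rb") as audio_file:
    transcript = client.audio.transcriptions.create(model="whisper-1", file=audio_file)

print(transcript.text)
```

3. Natural language understanding and response generation: Feed the transcribed text to an OpenAI chat model (for example, a GPT-4-family model) to understand the user's intent and generate an appropriate response.

Example (Python, continuing from the previous snippet):

```python
response = client.chat.completions.create(
    model="gpt-4o-mini",  # example model name; choose one that fits your cost and quality needs
    messages=[{"role": "user", "content": transcript.text}],
)

print(response.choices[0].message.content)
```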

4. Text-to-speech for output: Convert the generated text response back into speech using a text-to-speech API, such as OpenAI's own text-to-speech endpoint, Google Cloud Text-to-Speech, or Amazon Polly.
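Text-to-speech services generally limit how much text a single request may contain, so a long model response may need to be split before synthesis. The sketch below (the helper `split_for_tts` is hypothetical) splits at sentence boundaries and hard-wraps any sentence that is too long on its own; the default `max_chars=1000` is a placeholder, so set it from your provider's documented limit.

```python
import re


def split_for_tts(text: str, max_chars: int = 1000) -> list[str]:
    """Split text into chunks of at most max_chars characters, preferring sentence boundaries."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: list[str] = []
    current = ""
    for sentence in sentences:
        # Hard-wrap any single sentence that exceeds the limit by itself.
        while len(sentence) > max_chars:
            if current:
                chunks.append(current)
                current = ""
            chunks.append(sentence[:max_chars])
            sentence = sentence[max_chars:]
        candidate = f"{current} {sentence}".strip()
        if len(candidate) <= max_chars:
            current = candidate  # the sentence still fits in the current chunk
        else:
            chunks.append(current)  # close the current chunk and start a new one
            current = sentence
    if current:
        chunks.append(current)
    return chunks
```

Each chunk can then be sent to the text-to-speech API in order and the resulting audio played back sequentially.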

5. Integration: Combine these steps to create a basic workflow: receive audio input, transcribe it, process it with the language model, and convert the response to speech.
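The workflow in step 5 can be sketched as one function that chains the three stages. To keep the sketch provider-agnostic and testable without network access, each stage is passed in as a plain function; `run_turn` and the stub stages in the usage example are hypothetical, and in a real assistant you would plug in the Whisper, chat-model, and text-to-speech calls from the earlier steps.

```python
from typing import Callable

# Each stage is injected so the pipeline can run against stubs or real APIs.
Transcriber = Callable[[bytes], str]
Responder = Callable[[str], str]
Synthesizer = Callable[[str], bytes]


def run_turn(
    audio: bytes,
    transcribe: Transcriber,
    respond: Responder,
    synthesize: Synthesizer,
) -> bytes:
    """Handle one conversational turn: audio in, spoken reply (audio bytes) out."""
    text = transcribe(audio).strip()
    if not text:
        # Nothing intelligible was heard; ask the user to repeat instead of calling the model.
        return synthesize("Sorry, I didn't catch that.")
    reply = respond(text)
    return synthesize(reply)


if __name__ == "__main__":
    # Stub stages stand in for the Whisper, chat-model, and text-to-speech calls.
    reply_audio = run_turn(
        b"hello",
        transcribe=lambda audio: audio.decode("utf-8"),
        respond=lambda text: f"You said: {text}",
        synthesize=lambda text: text.encode("utf-8"),
    )
    print(reply_audio)  # b'You said: hello'
```

Injecting the stages this way also makes it straightforward to swap one provider (for example, the text-to-speech service) without touching the rest of the loop.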

- Code examples: More comprehensive code examples can be found in OpenAI's documentation and various community resources.

- How the steps fit together: Each step is essential; speech-to-text captures the user's input, the language model interprets it and drafts a reply, and text-to-speech delivers the response.

- Troubleshooting tips: When a call fails, read the error message the API returns (for example, an invalid API key, an unsupported audio format, or an exceeded rate limit) and compare your request against the API documentation.
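Transient failures such as timeouts or rate limits are common when calling hosted APIs, and retrying with exponential backoff is a widely used way to handle them. The sketch below is generic and hypothetical (`with_retries` is not part of the OpenAI SDK); the official Python client also has its own `max_retries` setting, so check its documentation before layering your own retries on top. Which exception types count as retryable is an assumption you should adapt to your client library.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    call: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 0.5,
    retry_on: tuple[type[BaseException], ...] = (TimeoutError, ConnectionError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run call(), retrying on the given exception types with exponential backoff and jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call()
        except retry_on as exc:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the original error
            delay = base_delay * (2 ** attempt) * random.uniform(0.5, 1.5)
            print(f"attempt {attempt + 1} failed ({exc!r}); retrying in {delay:.2f}s")
            sleep(delay)
    raise RuntimeError("unreachable")  # the loop always returns or raises
```

Errors outside `retry_on` (such as an invalid request) propagate immediately, since retrying them would not help.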

Advanced Features and Customization Options

Once you have a basic voice assistant working, you can expand its capabilities significantly:

- Integrating with external services: Connect your assistant to services like calendars, weather APIs, and email to make it more capable and useful. This typically means calling the APIs those services provide to fetch and process information.

- Personalizing the assistant's personality and responses: Shape the language model's behavior to give your assistant a distinctive personality, either through prompt engineering (for example, a system message that describes the persona) or by fine-tuning on custom datasets.

- Adding context awareness and memory: Give your assistant the ability to remember past interactions so it can maintain context and provide more relevant responses over time. This often involves dialogue-management techniques, such as storing the conversation history and sending the relevant parts along with each new request.

- Training custom models for specific tasks or domains: If you need a voice assistant specialized for a particular industry or task, you can fine-tune supported OpenAI models on domain-specific data to improve accuracy and performance.

- Examples of advanced features: Setting reminders, answering complex questions, providing personalized recommendations, and controlling smart home devices.

- Documentation: OpenAI's API documentation covers API usage, model selection, and advanced features such as fine-tuning in more depth.

- Limitations and challenges: Advanced features usually require more complex code, and each additional API call adds cost and latency.
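One simple way to connect the assistant to external services is to route recognized requests to handler functions that call those services. The sketch below uses plain keyword matching as a stand-in for more robust intent detection (for example, the function-calling feature of OpenAI's chat models); the handlers `get_weather` and `next_meeting` and their canned replies are hypothetical placeholders for real weather or calendar API calls.

```python
from typing import Callable, Optional


# Hypothetical handlers; in a real assistant each would call an external service's API.
def get_weather(query: str) -> str:
    return "It is sunny (placeholder weather data)."


def next_meeting(query: str) -> str:
    return "Your next meeting is at 10:00 (placeholder calendar data)."


ROUTES: dict[str, Callable[[str], str]] = {
    "weather": get_weather,
    "meeting": next_meeting,
    "calendar": next_meeting,
}


def route(query: str) -> Optional[str]:
    """Return a handler's answer if a keyword matches, else None (fall back to the language model)."""
    lowered = query.lower()
    for keyword, handler in ROUTES.items():
        if keyword in lowered:
            return handler(query)
    return None
```

When `route` returns `None`, the transcript goes to the language model as usual.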
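Personality through prompt engineering and short-term memory can both be expressed in the message list sent to a chat model: a fixed system message sets the persona, and recent turns are replayed so the model keeps context. The `Conversation` class below is a hypothetical sketch; it bounds history by number of turns for simplicity, whereas production systems often budget by tokens or summarize older turns.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Keeps a persona prompt plus a bounded window of recent turns."""

    persona: str
    max_turns: int = 5  # one turn = one user message plus one assistant reply
    history: list[dict[str, str]] = field(default_factory=list)

    def add_turn(self, user_text: str, assistant_text: str) -> None:
        self.history.append({"role": "user", "content": user_text})
        self.history.append({"role": "assistant", "content": assistant_text})
        # Drop the oldest messages once the window is full (always a whole turn at a time).
        excess = len(self.history) - 2 * self.max_turns
        if excess > 0:
            del self.history[:excess]

    def messages_for(self, user_text: str) -> list[dict[str, str]]:
        """Build the `messages` list for the next chat request."""
        return (
            [{"role": "system", "content": self.persona}]
            + self.history
            + [{"role": "user", "content": user_text}]
        )
```

The returned list can be passed as the `messages` argument of a chat completion request, and the model's reply recorded with `add_turn` afterwards.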
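Fine-tuning OpenAI chat models takes training data as a JSONL file in which each line is one example conversation stored under a `messages` key. The sketch below writes such a file from question and answer pairs; the helper `write_finetune_jsonl`, the system prompt, and any example pairs are hypothetical, so check OpenAI's fine-tuning guide for current requirements such as minimum example counts and which models support fine-tuning.

```python
import json
from typing import Iterable


def write_finetune_jsonl(path: str, system_prompt: str, pairs: Iterable[tuple[str, str]]) -> int:
    """Write one chat-format training example per line; return the number of examples written."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in pairs:
            example = {
                "messages": [
                    {"role": "system", "content": system_prompt},
                    {"role": "user", "content": question},
                    {"role": "assistant", "content": answer},
                ]
            }
            f.write(json.dumps(example, ensure_ascii=False) + "\n")
            count += 1
    return count
```

Keeping the same system prompt in training and at inference time helps the fine-tuned model behave consistently.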

Choosing the Right OpenAI Model for Your Voice Assistant

Selecting the appropriate OpenAI model is crucial for optimizing performance and cost. Several factors influence this decision:

- Cost: Larger models typically offer superior performance but come at a higher price per request.

- Performance: Beyond answer quality, consider response speed; larger models tend to respond more slowly, and latency is especially noticeable in a spoken conversation.

- Accuracy: The model's ability to correctly interpret speech and generate appropriate responses directly impacts user experience.
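Cost comparisons come down to simple arithmetic over expected usage: chat models are billed per token (with input and output tokens priced separately) and Whisper transcription is billed per minute of audio. The function below is a sketch of that calculation for one voice turn (text-to-speech costs are left out); the prices in the usage line are placeholders, not OpenAI's actual rates, so substitute current figures from the pricing page.

```python
def estimate_turn_cost(
    input_tokens: int,
    output_tokens: int,
    audio_minutes: float,
    price_in_per_million: float,
    price_out_per_million: float,
    price_per_audio_minute: float,
) -> float:
    """Estimated cost of one voice turn: one transcription plus one chat completion."""
    chat_cost = (
        input_tokens * price_in_per_million + output_tokens * price_out_per_million
    ) / 1_000_000
    transcription_cost = audio_minutes * price_per_audio_minute
    return chat_cost + transcription_cost


# Placeholder prices (NOT real rates): $1.00 / $4.00 per million tokens, $0.01 per audio minute.
cost = estimate_turn_cost(500, 200, 0.25, 1.00, 4.00, 0.01)
print(f"${cost:.4f} per turn")  # $0.0038 per turn
```

Multiplying the per-turn estimate by expected daily turns gives a quick way to compare models before committing to one.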

Here's a comparison of some models:

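- GPT-3 (various versions): Historically offered a good balance between cost and performance for many voice assistant applications. Many of the original GPT-3 models, including `text-davinci-003`, have since been retired, so check OpenAI's current model list for their cost-effective successors.

- GPT-4: Provides improved performance and accuracy over GPT-3 but at a higher cost. Suitable for more complex tasks and demanding applications.

- Whisper (various sizes): The open-source Whisper release comes in several sizes, from tiny to large; larger models are more accurate but are more computationally expensive, so choose a size that matches your accuracy needs and available hardware. The hosted API serves Whisper as the `whisper-1` model.

- Model documentation: OpenAI's documentation lists each model's name, capabilities, and limits.

- Pros and cons: Weigh each model's strengths and weaknesses (quality, speed, and price) against your requirements before selecting one.

- Use cases: A simple command-driven assistant may work well with a smaller, cheaper model, while open-ended conversation or complex reasoning benefits from a larger one.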
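If you run the open-source Whisper package locally instead of the hosted API, model size is a trade-off between accuracy and memory. The helper below picks the largest size that fits a memory budget. The approximate VRAM figures are taken from the openai-whisper README at the time of writing and should be re-checked (newer variants are omitted), and `pick_whisper_size` is a hypothetical helper, not part of the package.

```python
# Approximate VRAM needs from the openai-whisper README (verify against the current README).
# Ordered from smallest to largest model.
WHISPER_VRAM_GB = [
    ("tiny", 1.0),
    ("base", 1.0),
    ("small", 2.0),
    ("medium", 5.0),
    ("large", 10.0),
]


def pick_whisper_size(available_vram_gb: float) -> str:
    """Return the largest Whisper size whose approximate VRAM need fits the budget."""
    fitting = [name for name, need in WHISPER_VRAM_GB if need <= available_vram_gb]
    if not fitting:
        raise ValueError("not enough memory for even the smallest Whisper model")
    return fitting[-1]
```

The result can be passed to the package's `whisper.load_model()`, for example `whisper.load_model(pick_whisper_size(6.0))`.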

Conclusion

OpenAI's innovative tools have significantly lowered the barrier to entry for developing voice assistants. By following the steps outlined above, you can create your own voice assistant, incorporating advanced features and customization options. Whether you're a seasoned developer or just starting out, OpenAI empowers you to build sophisticated voice assistants easily and efficiently. Start building your voice assistant with OpenAI's powerful tools today and experience the future of voice technology!

Featured Posts

-

Ella Travolta Slicnost S Ocem I Put Do Zrelosti

Apr 24, 2025

Ella Travolta Slicnost S Ocem I Put Do Zrelosti

Apr 24, 2025 -

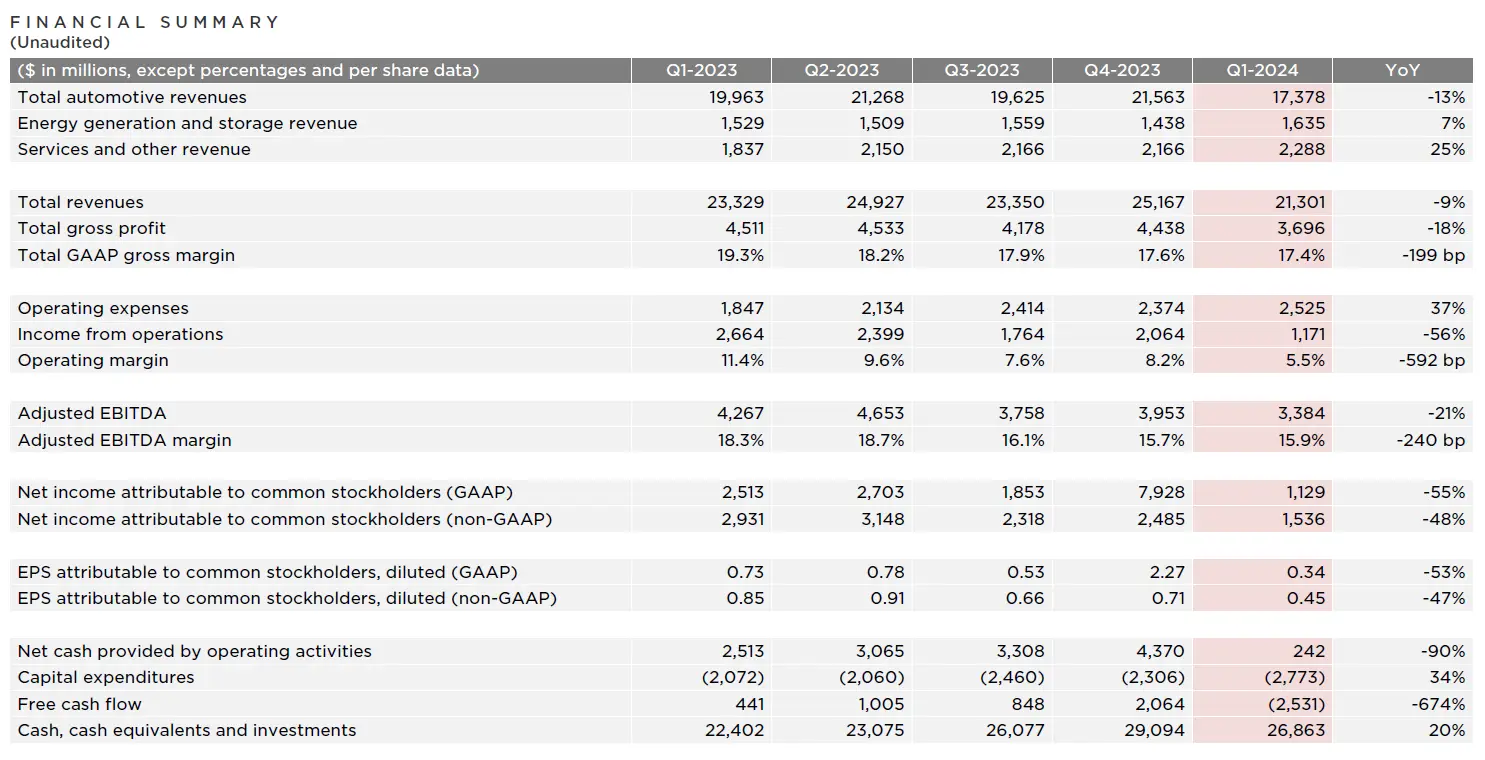

Sharp Fall In Teslas Q1 Profit Analyzing The Musk Factor

Apr 24, 2025

Sharp Fall In Teslas Q1 Profit Analyzing The Musk Factor

Apr 24, 2025 -

Google Fi 35 Unlimited Plan A Detailed Review

Apr 24, 2025

Google Fi 35 Unlimited Plan A Detailed Review

Apr 24, 2025 -

Canadian Dollar Plunges Despite Us Dollar Gains

Apr 24, 2025

Canadian Dollar Plunges Despite Us Dollar Gains

Apr 24, 2025 -

Cantor Fitzgerald In Talks For 3 Billion Crypto Spac With Tether And Soft Bank

Apr 24, 2025

Cantor Fitzgerald In Talks For 3 Billion Crypto Spac With Tether And Soft Bank

Apr 24, 2025